Background

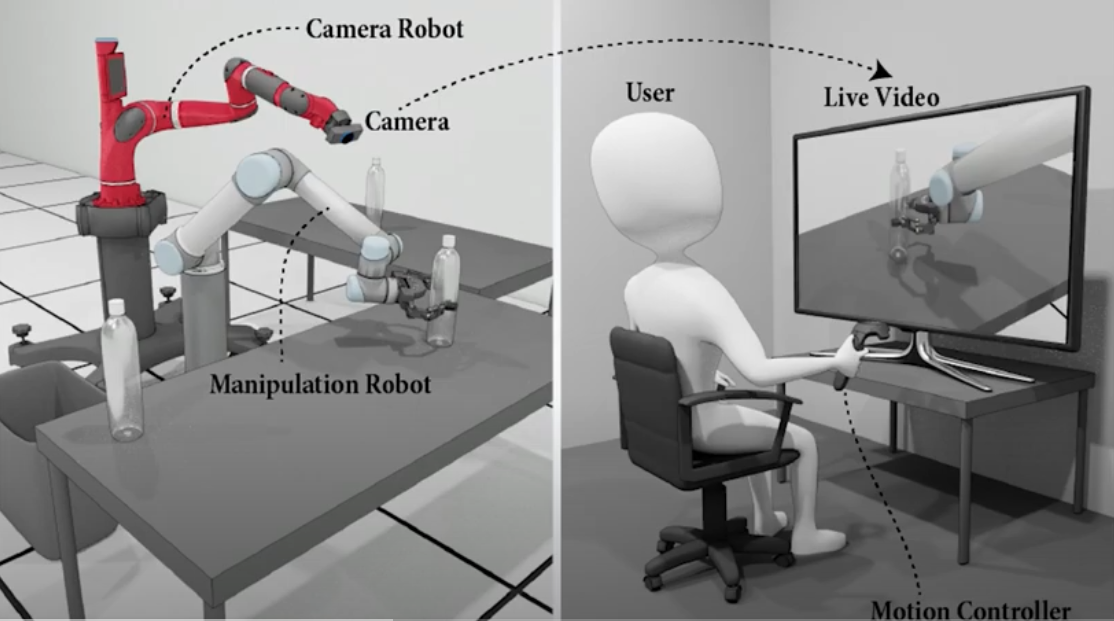

One of our group members, Haochen, has been working with Relaxed IK (Inverse Kinematics), an optimization-based motion planning framework, in our department's robotics lab. A camera-in-hand robot arm driven by Relaxed IK can adapt its viewpoint in visually complex environments. This second arm continuously provides remote users with an effective viewpoint, which makes it easier for them to teleoperate a manipulation robot arm.